New Delhi | July 25, 2025

Summary:

OpenAI CEO Sam Altman has sounded a fresh alarm, this time about AI tools that mimic human voices to trick people. As deepfake audio grows eerily realistic, even your own voice could become a tool for financial scams.

Artificial intelligence is no longer just generating text or images; it is now dangerously good at copying your voice. OpenAI CEO Sam Altman recently cautioned that voices cloned from short audio samples are being used to impersonate people, especially to commit financial fraud. The threat is no longer science fiction.


Can AI Really Steal Your Voice for Money?

Altman shared the warning at a recent AI safety event, urging users to be extremely careful about where and how they share voice data, including casual voice notes and social media uploads. At a time when some banks use voice authentication and family members may receive panicked SOS calls, a cloned voice could have disastrous consequences.

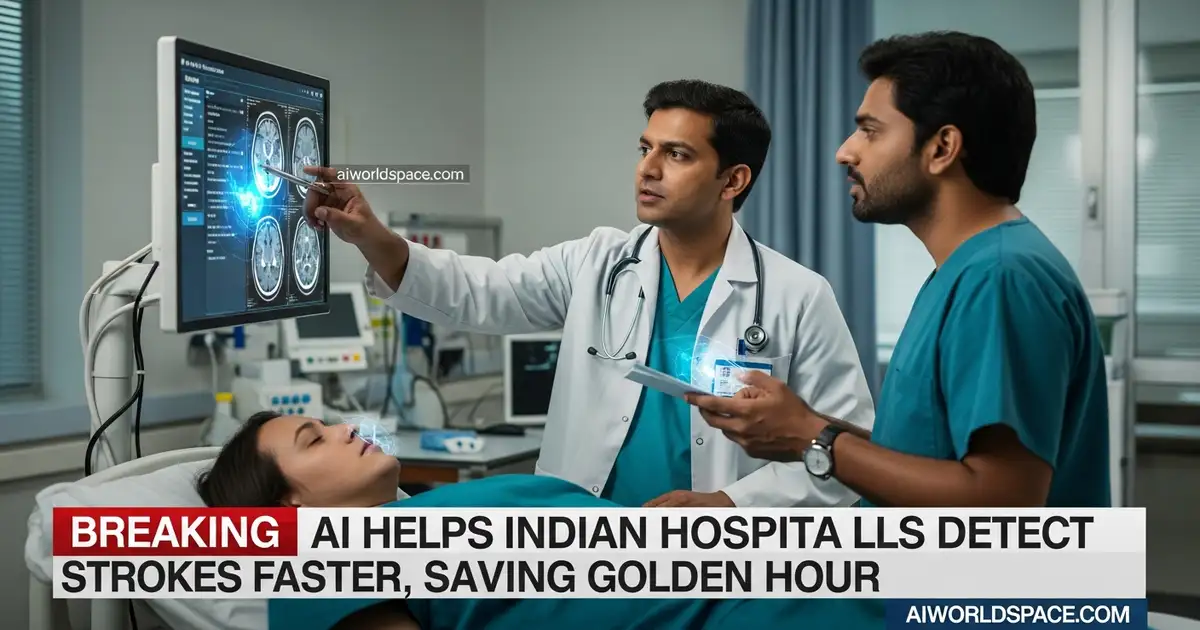

India, in particular, has seen a sharp rise in deepfake-enabled scams. From fake distress calls to fraudulent payment requests, scammers are using cloned voices to create urgency and manipulate emotions, often targeting the elderly and people less familiar with digital tools.

What Makes This So Dangerous for Indian Users?

India's booming UPI ecosystem and rapid digital adoption make the country fertile ground for such AI-enabled scams. With just a few seconds of audio, lifted from a YouTube video, an Instagram reel, or even a WhatsApp voice message, fraudsters can build a convincing copy of someone's voice. Combine that with emotional manipulation ("Mummy, please send money fast!") and you have a recipe for disaster.

The warning comes as OpenAI continues to develop advanced audio tools such as Voice Engine, which has itself sparked debate over ethical use and security protocols. Altman's statement hints at concern within AI labs about how easily their tools could be weaponised by bad actors.

The Bigger Shift: From Visual Deepfakes to Audio Threats

Until now, most public alarm about AI has centred on visual deepfakes. But voice cloning, which is easier, faster, and more emotionally compelling, is quietly emerging as a more insidious threat. Your identity could be stolen not only through a fake photo but also through a phone call that sounds exactly like you.

As Altman rightly said, “In a world where AI can imitate anyone’s voice, we need to rethink authentication, trust, and privacy from scratch.”

